Natural Language Understanding (LUIS)

Contents

- What is LUIS?

- Key Concepts

- Relationships

- API Key Limits

- Video

- Demo: Build a Language Understanding Application with Music Skills

- Demo: Teach LUIS to recognise a New Entity (Song)

- Demo: Train LUIS to be more accurate (Python)

1. What is LUIS?

Language Understanding Intelligent Service (LUIS) is a machine-learning-based offering that forms part of Microsoft's Cognitive Services suite. Given natural-language input in the form of text, LUIS can infer a user's intent and extract key pieces of information (entities). Combined with other services such as Azure Bot Service and the Bing Speech API, it can enable conversation-based interfaces (e.g. chatbots).

2. Key Concepts

Utterance

A sequence of continuous speech followed by a clear pause. In the context of LUIS, utterances are used as input to predict intent and entities.

Intents

An intent represents a task or action that the user wants to perform. From a developer's perspective, intents typically translate to the eventual invocation of a method. For example: Input Text = "Play Billie Jean by Michael Jackson"; Intent = "Play Music"; Method Invoked = playMusic(song).
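To make the intent-to-method idea concrete, here is a minimal sketch of a dispatch table. `play_music`, `INTENT_HANDLERS` and `dispatch` are hypothetical names for illustration only; `Music.PlayMusic` is the intent name used by the prebuilt Music domain later in this post.

```python
def play_music(song=None, artist=None):
    """Hypothetical handler for the Music.PlayMusic intent."""
    return 'Playing {0} by {1}'.format(song, artist)


# Map each intent name returned by LUIS to the method it should invoke.
INTENT_HANDLERS = {
    'Music.PlayMusic': play_music,
}


def dispatch(intent, **entities):
    """Invoke the handler registered for an intent, passing entities as keyword arguments."""
    intent_handler = INTENT_HANDLERS.get(intent)
    if intent_handler is None:
        # Unknown intents fall back to a polite message instead of raising.
        return "Sorry, I don't know how to handle '{0}'.".format(intent)
    return intent_handler(**entities)


print(dispatch('Music.PlayMusic', song='Billie Jean', artist='Michael Jackson'))
```

A dictionary keeps the mapping in one place, so supporting a new intent means registering one more function rather than growing an if/elif chain.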

Entities

Entities are the key pieces of information that we want to extract from utterances. From a developer's perspective, entities can be thought of as variables. For example: Input Text = "Play Billie Jean by Michael Jackson"; Entities include: Song (Billie Jean) and Artist (Michael Jackson).
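Treating entities as variables, a small helper can lift them out of a prediction response. The sample dictionary below is hand-written to mirror the fields the Python script in section 8 reads (`query`, `topScoringIntent`, `entities`, `type`, `entity`). The entity type names `Music.Song` and `Music.Artist` are assumed to follow the `Music.Genre` naming pattern, and the scores are illustrative, not real LUIS output.

```python
# Hand-written sample shaped like a LUIS v2 prediction; values are illustrative.
sample_response = {
    'query': 'play billie jean by michael jackson',
    'topScoringIntent': {'intent': 'Music.PlayMusic', 'score': 0.98},
    'entities': [
        {'entity': 'billie jean', 'type': 'Music.Song', 'score': 0.91},
        {'entity': 'michael jackson', 'type': 'Music.Artist', 'score': 0.95},
    ],
}


def extract_entities(response):
    """Collapse the entity list into a {type: value} dict, one 'variable' per type."""
    values = {}
    for entity in response.get('entities', []):
        # Keep the first value seen for each entity type.
        values.setdefault(entity['type'], entity['entity'])
    return values


entities = extract_entities(sample_response)
song = entities.get('Music.Song')
artist = entities.get('Music.Artist')
print(song, '/', artist)
```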

Phrase List

A phrase list is a grouping mechanism (e.g. Name: Cities; Values: London, Paris, Melbourne, Berlin) that gives LUIS a hint to treat these values similarly.

Note: Phrase lists can be flagged as "interchangeable" or "non-interchangeable".

- Interchangeable refers to phrase lists that contain synonyms (e.g. create, generate, produce, make).

- Non-Interchangeable refers to phrase lists that contain values that are similar in other ways (e.g. Arsenal, Chelsea, Liverpool, Tottenham).

3. Relationships

The following diagram illustrates relationships between our core elements.

4. API Key Limits

Once a LUIS resource has been created via the Azure Portal (as I will demonstrate later in the demo), its API key needs to be added to the LUIS application so that we can query our LUIS endpoint. Note: LUIS applications come with a "Starter Key" by default; this key is intended for authoring purposes only.

- Free Tier: 10,000 transactions per month (maximum of 5 calls per second).

- Basic Tier: 1 million transactions per month (maximum of 50 calls per second).
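The limits translate into simple arithmetic, and a client that fires requests in a loop (like the script in section 8) can stay under the per-second cap by spacing its calls. This is a minimal sketch using the Free-tier figures quoted above; the `Throttle` class is a hypothetical helper, not part of any LUIS SDK.

```python
import time

# Free-tier figures quoted above.
FREE_TIER_MONTHLY_TRANSACTIONS = 10_000
FREE_TIER_CALLS_PER_SECOND = 5

# At the maximum rate, the monthly allowance lasts 10,000 / 5 = 2,000 seconds (about 33 minutes).
seconds_to_exhaust = FREE_TIER_MONTHLY_TRANSACTIONS / FREE_TIER_CALLS_PER_SECOND


class Throttle:
    """Hypothetical helper: space out calls to stay under a calls-per-second limit."""

    def __init__(self, calls_per_second):
        self.min_interval = 1.0 / calls_per_second
        self._last_call = None

    def wait(self):
        """Sleep just long enough to keep consecutive calls min_interval apart."""
        if self._last_call is not None:
            elapsed = time.monotonic() - self._last_call
            if elapsed < self.min_interval:
                time.sleep(self.min_interval - elapsed)
        self._last_call = time.monotonic()
```

Creating `throttle = Throttle(FREE_TIER_CALLS_PER_SECOND)` once and calling `throttle.wait()` just before each request keeps consecutive calls at least 0.2 seconds apart.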

5. Demo [Video]

In this demo, we will create a LUIS application that can derive intent and entities within the music domain. Fortunately, LUIS has a number of prebuilt domains, which are a great way to get started quickly.

Note: The demo has been broken into three parts. Step-by-step instructions are also available from section 6 of this post onward.

6. Demo: Build a Language Understanding Application with Music Skills

1. Create a Language Understanding (LUIS) resource within the Azure Portal. We will revisit this resource when we need to retrieve an API key to use in conjunction with a published application.

2. Log in to https://www.luis.ai and create a new application. Note: You may need to sign up for a LUIS account.

3. Add a Prebuilt Domain (Prebuilt Domains > Search "Music" > Click [Add Domain]).

Note: The domain has been successfully added once the "Remove Domain" button appears; this can take up to a minute.

You'll notice that your application now contains Intents, Entities and Utterances focused on the Music domain (e.g. Play Music, Increase Volume, Genre, Artist).

4. Train the application.

Before we can execute any tests, we need to train our application on the content (Intents, Entities and Utterances) provided by the prebuilt domain. Once trained, we can test the application's ability to understand certain phrases (e.g. Input: "Play Drake's playlist"; Intent: Play Music; Artist: Drake).

Relationships Visualised

- Our Application (Music) has many Intents.

- Each Intent (e.g. Music.PlayMusic) has many Utterances.

- Each Utterance (e.g. play me a blues song) can have zero or more Entities (e.g. Music.Genre).
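The three relationships above can be written down as a tiny data model. These classes are a hypothetical illustration only, not the schema LUIS uses to export applications; the second utterance ("play something") is an invented example with no entities.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Entity:
    type: str   # e.g. 'Music.Genre'
    value: str  # the span of text labelled with that type


@dataclass
class Utterance:
    text: str
    entities: List[Entity] = field(default_factory=list)  # zero or more


@dataclass
class Intent:
    name: str
    utterances: List[Utterance] = field(default_factory=list)  # many


@dataclass
class Application:
    name: str
    intents: List[Intent] = field(default_factory=list)  # many


music = Application('Music', intents=[
    Intent('Music.PlayMusic', utterances=[
        Utterance('play me a blues song', [Entity('Music.Genre', 'blues')]),
        Utterance('play something'),
    ]),
])
```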

5. Publish the application and add your API Key.

- Production or Staging: Each application has two slots available, which allows developers to deploy and test a non-production endpoint.

- Include all predicted intent scores: Checking this option alters the endpoint to include "verbose=true" (i.e. the response returns scores for all intents, not just the top-scoring one).

- Enable Bing spell checker: Checking this option alters the endpoint to include "bing-spell-check-subscription-key={YOUR_BING_KEY_HERE}". This lets the application correct spelling mistakes before the utterance is processed.

- Resources and Keys: It is within this section that you can add your API key from the LUIS resource we created in Step 1.

Important: The region of the LUIS resource must match the region the key is being added to within the Publish screen.
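The Publish options end up as query parameters on the endpoint URL, and the region appears in the host name. Here is a minimal sketch of assembling that URL, assuming the v2 endpoint format used by the script in section 8 and assuming the key is passed as a `subscription-key` query parameter (the script itself sends it in an `Ocp-Apim-Subscription-Key` header instead). Pairing `spellCheck=true` with the Bing key is also an assumption, and `build_endpoint_url` is a hypothetical helper.

```python
from urllib.parse import urlencode


def build_endpoint_url(region, app_id, key, query, verbose=False,
                       staging=False, bing_key=None):
    """Assemble a LUIS v2 prediction URL from the Publish-screen options."""
    params = {
        # Assumption: the key travels as a query parameter, as in a copied endpoint URL.
        'subscription-key': key,
        'verbose': 'true' if verbose else 'false',
        'staging': 'true' if staging else 'false',
        'q': query,
    }
    if bing_key:
        # Assumption: enabling the spell checker adds both of these parameters.
        params['spellCheck'] = 'true'
        params['bing-spell-check-subscription-key'] = bing_key
    # The region in the host must match the region of the LUIS resource.
    base = 'https://{0}.api.cognitive.microsoft.com/luis/v2.0/apps/{1}'.format(region, app_id)
    return base + '?' + urlencode(params)


url = build_endpoint_url('westus', 'YOUR_APP_ID_HERE', 'YOUR_API_KEY_HERE',
                         'play billie jean by michael jackson', verbose=True)
print(url)
```

`urlencode` encodes spaces as `+` by default; pass `quote_via=urllib.parse.quote` if you prefer `%20`.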

Test the endpoint:

- Copy and paste the URL into your browser.

- Update the q query parameter (e.g. &q=play%20billie%20jean%20by%20michael%20jackson).

- View the JSON response.
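The value of `q` must be URL-encoded, with each space becoming `%20`. Rather than encoding by hand, Python's standard `urllib.parse.quote` can produce it from a plain utterance:

```python
from urllib.parse import quote

utterance = 'play billie jean by michael jackson'
encoded = quote(utterance)  # spaces become %20; letters are left as-is
print('&q=' + encoded)
# prints: &q=play%20billie%20jean%20by%20michael%20jackson
```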

7. Demo: Teach LUIS to recognise a New Entity (Song)

As you may have noticed, while our application is able to derive Genre and Artist, we are missing Song. Follow the steps below to learn how to add a new entity, associate the entity with utterances and invoke LUIS's ability to perform "active learning".

1. Create a New Entity.

2. Label an Utterance.

Navigate to Intents > Music.PlayMusic. In the text box type an example utterance that includes a song name (e.g. "Play Hotline Bling by Drake"), hit enter and label the entities by clicking on them. Once the new utterance is labeled, re-train and test the application.

Notice the difference in results between the updated application vs. the version that is currently published.
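Labelling by clicking marks a character span of the utterance as an entity. As a rough illustration of what a label records, this hypothetical `label_span` helper finds a phrase's start and end character offsets. The end-inclusive convention is an assumption modelled on the `startIndex`/`endIndex` fields I would expect in v2 responses, so compare it against your own JSON.

```python
def label_span(utterance, phrase):
    """Return (start, end) character offsets of phrase in utterance, end inclusive."""
    start = utterance.lower().find(phrase.lower())
    if start == -1:
        raise ValueError('{0!r} not found in {1!r}'.format(phrase, utterance))
    return start, start + len(phrase) - 1


text = 'Play Hotline Bling by Drake'
print(label_span(text, 'Hotline Bling'))  # (5, 17)
print(label_span(text, 'Drake'))          # (22, 26)
```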

3. Active Learning

While our application can now correctly understand the phrase "Play Hotline Bling by Drake", if we test another phrase (e.g. "Play Humble by Kendrick Lamar"), the application fails to recognise the song. Fortunately, as part of LUIS's ability to actively learn, we can review any queries that have hit the endpoint and validate them via the "Review endpoint utterances" section.

While this is great, we obviously don't want to submit and review every possible song, nor is that expected. Given enough examples, LUIS begins to learn and establish patterns. That said, we need to provide a lot more utterances. I'll demonstrate how to hit the endpoint programmatically using Python, and then we'll return to our application to review the results.
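One way to supply more varied examples is to fill several phrasing templates with song data, so LUIS sees songs and artists in more than one sentence shape. The templates and the `generate_utterances` helper below are hypothetical; the script in section 8 uses only the "Play {song} by {artist}" phrasing.

```python
from itertools import product

# Hypothetical phrasing templates; {song} and {artist} are filled from sample data.
TEMPLATES = [
    'Play {song} by {artist}',
    'I want to hear {song} by {artist}',
    'Put on {song} from {artist}',
]


def generate_utterances(songs, templates=TEMPLATES):
    """Yield one utterance per (template, song) pair."""
    for template, (song, artist) in product(templates, songs):
        yield template.format(song=song, artist=artist)


songs = [('Hotline Bling', 'Drake'), ('Humble', 'Kendrick Lamar')]
for utterance in generate_utterances(songs):
    print(utterance)
```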

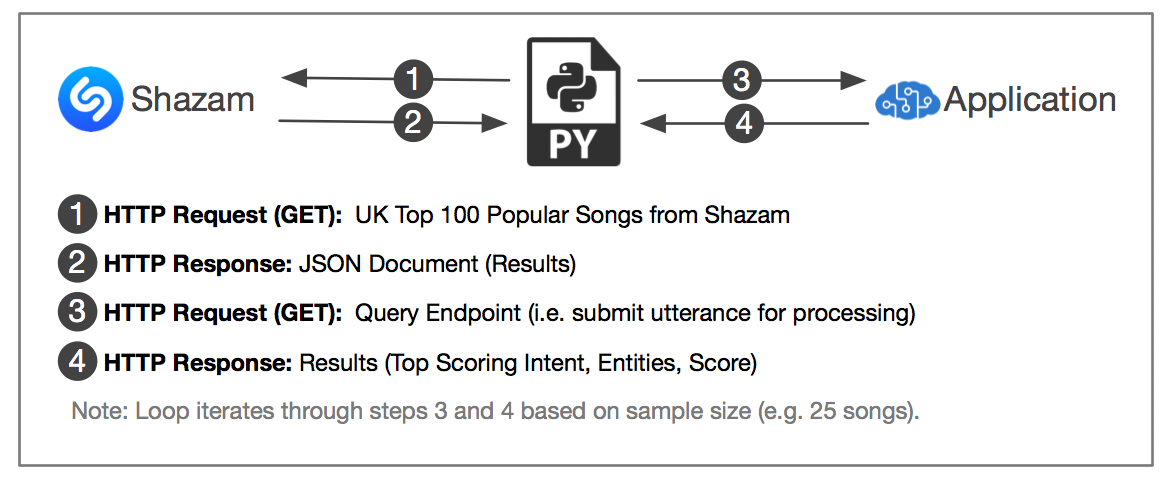

8. Demo: Train LUIS to be more accurate (Python)

For this demo, we will use Python to programmatically hit our endpoint using data from Shazam in two passes.

Pass 1

- Query the application with the first 25 songs (1 - 25).

- Review the endpoint utterances and update the entity labels.

- Re-train and publish the updated model.

Pass 2

- Query the application with the next 25 songs (26 - 50).

- Review the endpoint utterances.

We should notice a significant improvement in the application's ability to derive the correct entities.

High Level Flow

Steps: Pass 1

- Create a Python 3 virtual environment and install the requests library.

- Copy and paste the code into a Python file (e.g. luis.py).

- Update APP_ID and KEY variables with your own values.

- Execute the script.

- Review the endpoint utterances in the LUIS web application.

- Update the entity labels, train and re-publish.

Steps: Pass 2

- Update the Python script to shift the sample size (e.g. MIN = 25, MAX = 50; because the script keeps songs where MIN < counter <= MAX, this selects songs 26 to 50).

- Execute the script.

- Review the results; there should be a notable increase in accuracy.
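The script keeps a song when `MIN < counter <= MAX`, so the lower bound is exclusive: covering songs 26 to 50 needs bounds of 25 and 50, while a lower bound of 26 would start at song 27. This sketch checks the windowing rule on a stand-in list of 100 chart entries (hypothetical data, not Shazam's response); `select_window` is a hypothetical helper.

```python
def select_window(items, lower, upper):
    """Return items whose 1-based position p satisfies lower < p <= upper."""
    return [item for position, item in enumerate(items, start=1)
            if lower < position <= upper]


chart = ['song {0}'.format(n) for n in range(1, 101)]  # stand-in for 100 chart entries
pass_1 = select_window(chart, 0, 25)   # songs 1-25, same as chart[0:25]
pass_2 = select_window(chart, 25, 50)  # songs 26-50, same as chart[25:50]
print(pass_1[0], pass_1[-1], pass_2[0], pass_2[-1])
```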

Code

```python
import requests

ENDPOINT = 'https://westus.api.cognitive.microsoft.com/luis/v2.0/apps/'
APP_ID = 'YOUR_APP_ID_HERE'
KEY = 'YOUR_API_KEY_HERE'

# Songs are queried when MIN < position <= MAX (1-based), i.e. songs 1-25 here.
MIN = 0
MAX = 25


def handler():
    # Get UK Top 100 Popular Songs from Shazam
    shazam_url = 'https://www.shazam.com/shazam/v2/en/GB/web/-/tracks/web_chart_uk'
    shazam_response = requests.get(shazam_url)
    shazam_response.raise_for_status()
    shazam_data = shazam_response.json()

    # Loop through each song, keeping a 1-based position counter
    for counter, song in enumerate(shazam_data['chart'], start=1):
        if counter <= MIN:
            continue
        if counter > MAX:
            break
        song_title = song['heading']['title']
        song_artist = song['heading']['subtitle']
        luis_query = 'Play {0} by {1}'.format(song_title, song_artist)
        print('[{0}]--------------------------------------'.format(counter))
        understand(luis_query)


def understand(query):
    # Initialise URL, HTTP Header and URL Query Parameters
    url = ENDPOINT + APP_ID
    headers = {
        'Ocp-Apim-Subscription-Key': KEY
    }
    params = {
        'q': query,
        'timezoneOffset': '0',
        'verbose': 'false',
        'spellCheck': 'false',
        'staging': 'false'
    }

    # Query Endpoint (fail loudly on a bad key, wrong region, etc.)
    response = requests.get(url, headers=headers, params=params)
    response.raise_for_status()
    data = response.json()

    # Print Results
    print('Utterance:\n\t{0}'.format(data['query']))
    print('Intent:\n\t{0} ({1})'.format(
        data['topScoringIntent']['intent'],
        data['topScoringIntent']['score'],
    ))
    for entity in data['entities']:
        # Not every entity type reports a score, so fall back to None
        print('Entity:\n\t{0}:{1} {2}'.format(
            entity['type'],
            entity['entity'],
            entity.get('score'),
        ))


if __name__ == '__main__':
    handler()
```

Note: As you may have gathered, the more examples we feed LUIS, the more accurately it can predict entities and intent. There are other ways to work with LUIS more efficiently (e.g. client SDKs, batch testing, etc.). I won't cover these methods in this post, but they are worth checking out.

That's it! Hopefully, this gives you a good starting point with LUIS and some food for thought on what might be possible if this capability were used in conjunction with other services...

-fin-